Instrument and Phase Detection in Cholecystectomy

Surgeons who perform endoscopic procedures often complain about the lack of support for efficiently retrieving recordings from large-scale medical multimedia databases. For instance, a surgeon may want to show a particular part of a surgery to a patient. Since recordings may be up to a few hours long and usually come without metadata describing their content, the surgeon has to skim through the video to find the desired scene, which is tedious and time-consuming. One way to support surgeons is to temporally segment a long recording into semantically meaningful phases, which allows them to use such recordings efficiently in their everyday work.

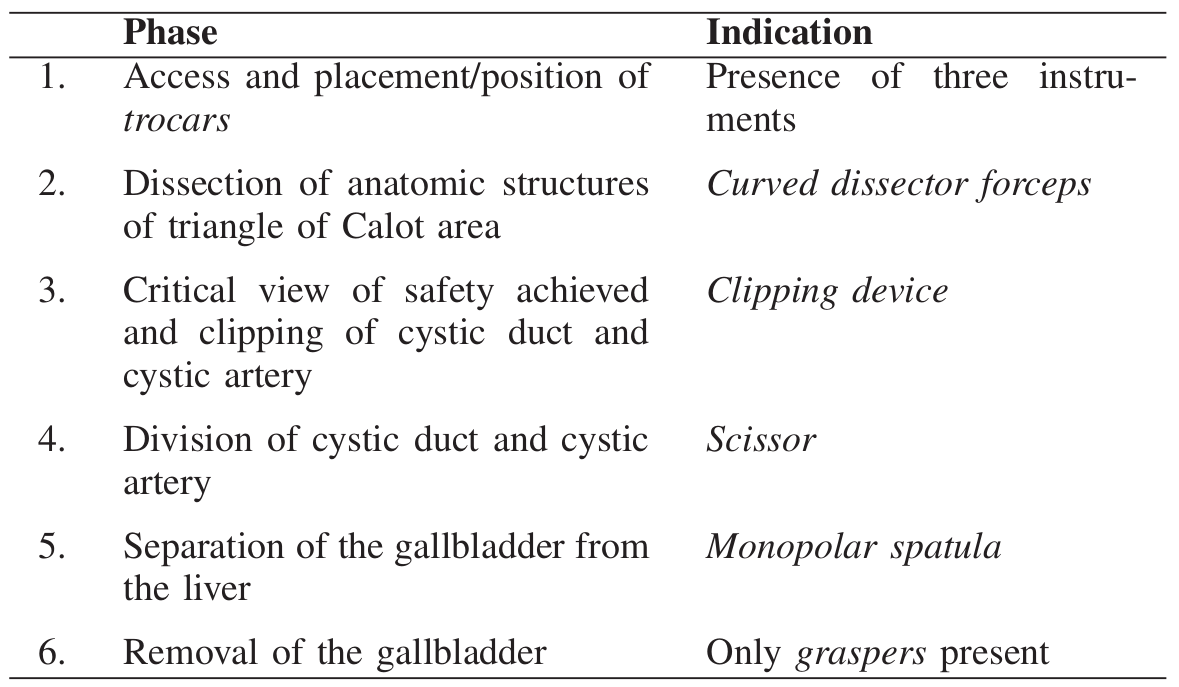

Content segmentation of laparoscopic videos is challenging because of the special characteristics of such video data: common shot detection methods used in other domains do not work. We therefore propose to use instrument classification to enable a semantic segmentation of laparoscopic videos. The basic step for scene segmentation in an endoscopic video is the reliable detection of particular phases of an intervention, which, for laparoscopy, can be achieved by recognizing surgical instruments. In particular, the appearance of one instrument, or of a certain combination of instruments, signals the beginning of a new phase. In close cooperation with medical experts, we identified dependencies between six individual workflow steps and the instruments used in cholecystectomy, i.e., the removal of the gallbladder. Thus, with the help of instrument classification for laparoscopic videos [1], we can segment laparoscopic videos into surgical phases [2].
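To make the idea of instrument-triggered phases concrete, the following is a minimal Python sketch of a rule-based segmenter. It assumes that per-frame instrument detections are already available as sets of labels, that the six phases always occur in a fixed order, and that each phase is triggered by a set of instruments. The trigger table `PHASE_TRIGGERS`, the phase names, and the `min_run` smoothing parameter are hypothetical and invented for illustration; they are not the mapping identified with the medical experts in [2].

```python
from typing import List, Sequence, Set, Tuple

# HYPOTHETICAL trigger table, invented for illustration: a phase starts when
# every instrument in its set is visible. Not the expert mapping from [2].
PHASE_TRIGGERS: List[Tuple[str, Set[str]]] = [
    ("phase 1", {"short grasper"}),
    ("phase 2", {"long grasper", "curved dissector forceps"}),
    ("phase 3", {"clipping device"}),
    ("phase 4", {"scissors"}),
    ("phase 5", {"monopolar spatula"}),
    ("phase 6", {"long grasper", "scissors"}),
]


def segment_phases(frames: Sequence[Set[str]], min_run: int = 3) -> List[Tuple[int, str]]:
    """Return (start_frame, phase_name) for each detected phase transition.

    Phases are assumed to follow the fixed order of PHASE_TRIGGERS. A phase is
    accepted only if its trigger instruments stay visible for `min_run`
    consecutive frames, so single misclassified frames are ignored.
    """
    transitions: List[Tuple[int, str]] = []
    next_phase = 0  # index of the phase we are waiting for
    run_start = -1  # first frame of the current run of trigger frames
    for i, visible in enumerate(frames):
        if next_phase >= len(PHASE_TRIGGERS):
            break  # all phases found
        name, trigger = PHASE_TRIGGERS[next_phase]
        if trigger <= visible:  # subset test: all trigger instruments visible
            if run_start < 0:
                run_start = i
            if i - run_start + 1 >= min_run:
                transitions.append((run_start, name))
                next_phase += 1
                run_start = -1
        else:
            run_start = -1
    return transitions


frames = [set()] * 2 + [{"short grasper"}] * 4 + [{"clipping device"}] \
    + [{"long grasper", "curved dissector forceps"}] * 3
print(segment_phases(frames))  # [(2, 'phase 1'), (7, 'phase 2')]
```

Requiring a short run of consecutive frames is one simple way to keep a single misclassified frame from triggering a phase change; in practice such a parameter would have to be tuned against annotated videos.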

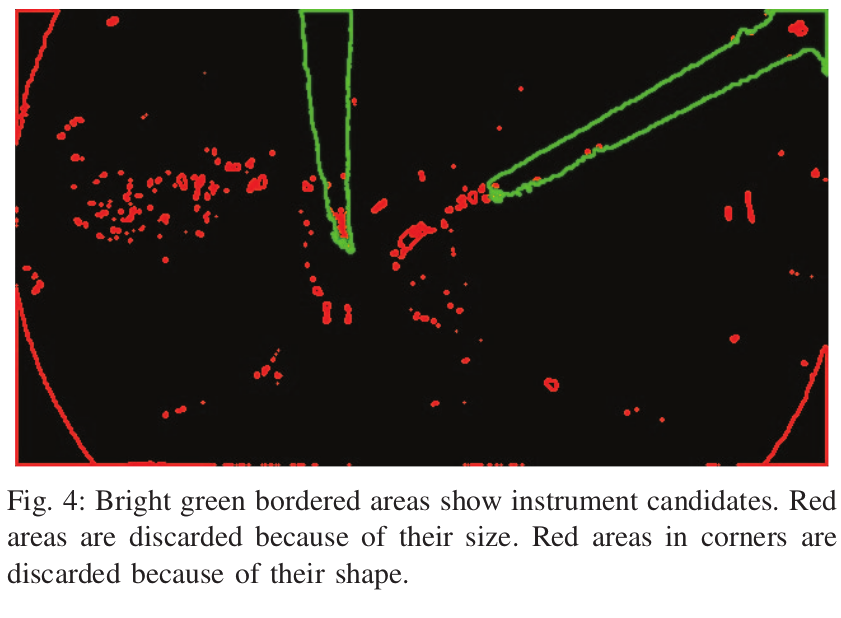

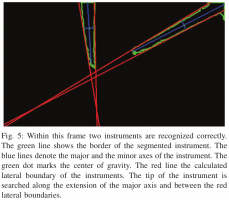

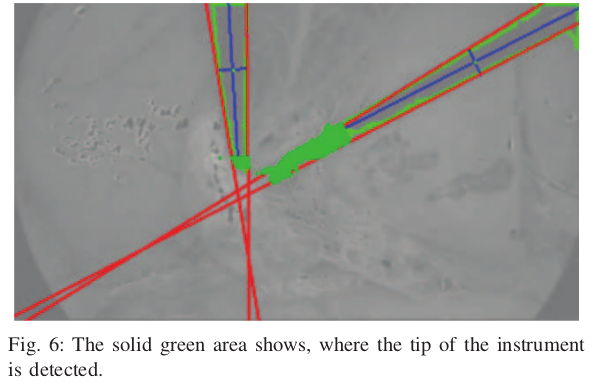

Our instrument classification approach consists of three steps. First, regions where instruments appear are separated from regions that contain no instrument pixels. Second, the identified instrument regions are classified. Third, the classified objects are assigned to their corresponding phase. We use color to distinguish between instrument and non-instrument regions: an examination of different color spaces showed that the CIE L*a*b* color space works better than RGB or HSV. Based on a histogram of the a* component, we separate instrument from non-instrument pixels in order to detect the contour of an instrument. However, this method reliably detects only the shaft of an instrument, not its tip, which is usually more characteristic. We therefore additionally apply image moments [3] and several heuristics to recognize the instrument tips.
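The color-based separation and a moment-based tip search might look roughly like the following NumPy sketch. It converts sRGB to CIE L*a*b* (D65 white point) and keeps only a*, on the assumption that metallic, greyish instruments have a* near zero while reddish tissue has a clearly positive a*. The text does not say how the threshold is chosen from the a* histogram, so Otsu's method is swapped in here as an assumption. The tip heuristic (take the end of the principal axis, derived from second-order central moments, that lies farther from the image border, because instruments enter the view from the border) is likewise a hypothetical stand-in for the heuristics mentioned above, and it assumes a single instrument region per mask. The function names `instrument_mask` and `estimate_tip` are illustrative.

```python
import numpy as np


def a_star(rgb: np.ndarray) -> np.ndarray:
    """CIE a* channel of an sRGB image (H, W, 3) with values in [0, 1], D65 white."""
    # sRGB gamma -> linear RGB
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    m = np.array([[0.4124564, 0.3575761, 0.1804375],   # linear RGB -> XYZ
                  [0.2126729, 0.7151522, 0.0721750],
                  [0.0193339, 0.1191920, 0.9503041]])
    t = lin @ m.T / np.array([0.95047, 1.0, 1.08883])  # normalise by white point
    d = 6 / 29
    f = np.where(t > d ** 3, np.cbrt(t), t / (3 * d ** 2) + 4 / 29)
    return 500 * (f[..., 0] - f[..., 1])  # a* = 500 (f(X/Xn) - f(Y/Yn))


def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Threshold maximising the between-class variance of the value histogram."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                 # pixel count at or below each bin
    w1 = w0[-1] - w0                     # pixel count above each bin
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return float(edges[np.argmax(between) + 1])


def instrument_mask(rgb: np.ndarray) -> np.ndarray:
    """True where a* is low (greyish instrument), False for reddish tissue."""
    a = a_star(rgb)
    return a < otsu_threshold(a.ravel())


def estimate_tip(mask: np.ndarray) -> tuple:
    """Hypothetical tip heuristic from image moments of a single-instrument mask."""
    ys, xs = np.nonzero(mask)
    cx, cy = xs.mean(), ys.mean()        # centroid = (m10/m00, m01/m00)
    mu20 = ((xs - cx) ** 2).mean()       # normalised central moments
    mu02 = ((ys - cy) ** 2).mean()
    mu11 = ((xs - cx) * (ys - cy)).mean()
    theta = 0.5 * np.arctan2(2 * mu11, mu20 - mu02)  # principal-axis angle
    proj = (xs - cx) * np.cos(theta) + (ys - cy) * np.sin(theta)
    h, w = mask.shape

    def border_dist(i: int) -> int:
        return int(min(xs[i], ys[i], w - 1 - xs[i], h - 1 - ys[i]))

    # The shaft enters from the image border, so keep the axis end farther from it.
    tip = max((int(np.argmin(proj)), int(np.argmax(proj))), key=border_dist)
    return int(xs[tip]), int(ys[tip])


frame = np.full((40, 60, 3), [0.8, 0.2, 0.2])  # reddish "tissue"
frame[18:22, :40] = 0.5                          # grey bar entering from the left
mask = instrument_mask(frame)
print(mask.sum(), estimate_tip(mask))  # 160 (39, 18)
```

For this synthetic frame, the mask covers exactly the grey bar and the estimated tip lies on its right-hand end, away from the left border where the "shaft" enters.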

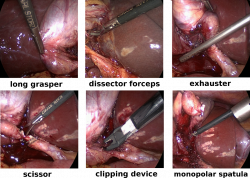

After an instrument has been detected within a frame, the next step of our algorithm is to identify the specific instrument type. For this task we use a Bag-of-visual-Words (BoW) [4] representation and Support Vector Machines (SVMs) [5]. Based on an evaluation of several local image features, we chose Oriented FAST and Rotated BRIEF (ORB) [6]. In particular, we build a vocabulary of 2048 visual words from ORB features using k-means clustering. As input, we use only ORB features located in pixel areas that show instruments exclusively. The SVM is trained as a set of binary one-versus-all classifiers: six classifiers identify the six instruments used in a cholecystectomy, namely the short grasper, long grasper, curved dissector forceps, clipping device, scissors, and monopolar spatula.
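A compact NumPy sketch of such a BoW-plus-SVM pipeline is shown below. ORB descriptors are 256-bit binary strings (32 bytes); the sketch unpacks them into 0/1 vectors so that plain k-means with squared Euclidean distance, which then equals the Hamming distance, can be applied, although how the binary descriptors were clustered is not stated above. Each instrument region becomes an L1-normalized histogram of visual-word counts, and one linear SVM per instrument is trained with the Pegasos stochastic sub-gradient method, a swapped-in solver, since the kernel and solver actually used are not specified. Descriptor extraction itself (e.g., with OpenCV) is omitted, the farthest-point initialization of k-means is an assumption, and all function names are hypothetical.

```python
import numpy as np


def unpack_orb(desc: np.ndarray) -> np.ndarray:
    """(n, 32) uint8 ORB descriptors -> (n, 256) 0/1 float vectors."""
    return np.unpackbits(desc, axis=1).astype(np.float64)


def sq_dists(x: np.ndarray, c: np.ndarray) -> np.ndarray:
    """Squared Euclidean distances (= Hamming distances for 0/1 vectors)."""
    return (x ** 2).sum(1)[:, None] - 2 * x @ c.T + (c ** 2).sum(1)[None, :]


def kmeans(x: np.ndarray, k: int, iters: int = 20) -> np.ndarray:
    """Lloyd's k-means with deterministic farthest-point initialisation (assumed)."""
    centers = [x[0]]
    for _ in range(1, k):
        nearest = sq_dists(x, np.array(centers)).min(axis=1)
        centers.append(x[nearest.argmax()])
    c = np.array(centers, dtype=np.float64)
    for _ in range(iters):
        labels = sq_dists(x, c).argmin(axis=1)
        for j in range(k):
            members = x[labels == j]
            if len(members):  # keep the old centre if a cluster empties
                c[j] = members.mean(axis=0)
    return c


def bow_histogram(x: np.ndarray, vocab: np.ndarray) -> np.ndarray:
    """L1-normalised visual-word histogram of one instrument region."""
    words = sq_dists(x, vocab).argmin(axis=1)
    counts = np.bincount(words, minlength=len(vocab)).astype(np.float64)
    return counts / max(counts.sum(), 1.0)


def train_linear_svm(X, y, lam=0.01, epochs=50, seed=0):
    """Pegasos SGD on the L2-regularised hinge loss; y in {-1, +1}.

    The bias is appended as a constant feature (and regularised, a simplification).
    """
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            t += 1
            eta = 1.0 / (lam * t)
            violated = y[i] * (Xb[i] @ w) < 1  # margin checked before the update
            w *= 1.0 - eta * lam
            if violated:
                w += eta * y[i] * Xb[i]
    return w


def train_one_vs_all(H, labels, classes):
    """One binary SVM per instrument: that instrument (+1) vs. all others (-1)."""
    return {c: train_linear_svm(H, np.where(labels == c, 1.0, -1.0)) for c in classes}


def predict(h, models):
    """Pick the instrument whose classifier gives the highest decision score."""
    hb = np.append(h, 1.0)
    return max(models, key=lambda c: hb @ models[c])
```

Calling `kmeans` with `k=2048` would produce a vocabulary of the size used in the text; a much smaller value keeps a toy run fast. At prediction time, the instrument whose one-versus-all classifier returns the highest score wins.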

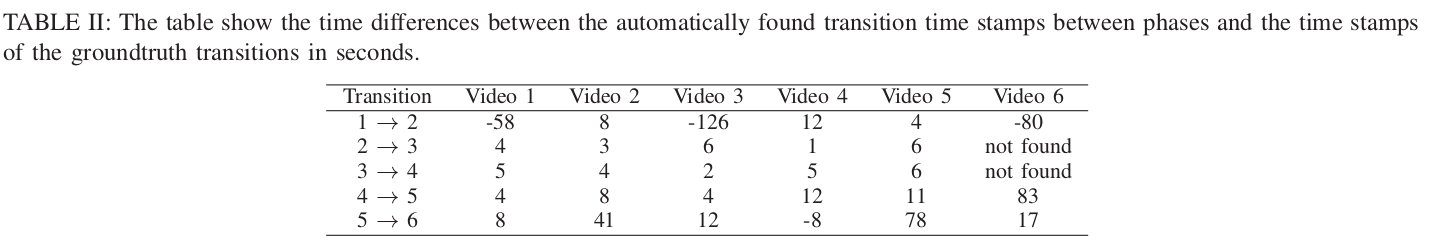

To evaluate our classification and phase detection approach, we use a custom dataset containing six cholecystectomies with an overall length of more than three hours. With the help of a medical expert, we manually annotated all videos according to the phases mentioned above. According to the expert, transitions between consecutive phases cannot be pinned down to the exact second. As a rule of thumb, a deviation of about five to ten seconds between the detected and the actual phase transition is therefore considered acceptable.
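Scoring detected transitions against the expert annotation under such a tolerance could be done as in the following sketch. It greedily pairs every annotated transition time (in seconds) with the nearest not-yet-used detection and counts a hit if the two are at most `tol` seconds apart. The greedy matching rule and the function name `match_transitions` are assumptions for illustration, not the evaluation protocol of [2].

```python
from typing import List, Sequence, Tuple


def match_transitions(detected: Sequence[float], actual: Sequence[float],
                      tol: float = 10.0) -> Tuple[int, List[float], List[float]]:
    """Greedily match annotated transitions to detections within `tol` seconds.

    Returns (number of hits, missed annotated times, signed offsets of hits),
    where an offset is detected time minus annotated time.
    """
    unused = sorted(detected)
    hits, missed, offsets = 0, [], []
    for t in sorted(actual):
        # closest remaining detection, or None if all detections are used up
        best = min(unused, key=lambda d: abs(d - t), default=None)
        if best is not None and abs(best - t) <= tol:
            unused.remove(best)  # each detection may match only once
            hits += 1
            offsets.append(best - t)
        else:
            missed.append(t)
    return hits, missed, offsets
```

For example, `match_transitions([126, 615], [120, 610, 900])` returns `(2, [900], [6, 5])`: two transitions are found within ten seconds and the one at 900 s is missed. Lowering `tol` to 5 would turn the six-second deviation into a miss as well.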

The evaluation results show that our approach detects the borders of most phases within a tolerance of ten seconds. Only two phases, both in the same video, could not be detected, owing to the poor video quality of that recording.

Phase detection is one step toward making surgeons more efficient when browsing collections of endoscopic videos. The evaluation results show that our proposed algorithm reliably detects the borders between phases. As future work, we want to evaluate our approach together with surgeons and examine how it performs on other types of endoscopic videos.

References

- M.J. Primus, K. Schöffmann, and L. Böszörmenyi, “Instrument Classification in Laparoscopic Videos,” in Proc. Int. Workshop on Content-Based Multimedia Indexing (CBMI), 2015, pp. 1–6.

- M.J. Primus, K. Schöffmann, and L. Böszörmenyi, “Temporal Segmentation of Laparoscopic Videos into Surgical Phases,” in Proc. Int. Workshop on Content-Based Multimedia Indexing (CBMI), 2016, pp. 1–6.

- R. Stauder, A. Okur, L. Peter, A. Schneider, M. Kranzfelder, H. Feussner, and N. Navab, “Random forests for phase detection in surgical workflow analysis,” in Information Processing in Computer-Assisted Interventions. Springer, 2014, pp. 148–157.

- G. Csurka, C. Dance, L. Fan, J. Willamowski, and C. Bray, “Visual categorization with bags of keypoints,” in Proc. of the Workshop on Statistical Learning in Computer Vision, ECCV, vol. 1, no. 1–22, 2004, pp. 1–2.

- C. Cortes and V. Vapnik, “Support-vector networks,” Machine Learning, vol. 20, no. 3, pp. 273–297, 1995.

- E. Rublee, V. Rabaud, K. Konolige, and G. Bradski, “ORB: An Efficient Alternative to SIFT or SURF,” in Proc. of the IEEE Int. Conf. on Computer Vision (ICCV), 2011, pp. 2564–2571.